Data. The basis of modern marketing. On the one hand, it opens up an ocean of possibilities to optimize marketing activities, and on the other hand, it generates a large amount of repetitive and irritating work, e.g. transferring and integrating data from various marketing sources.

If you want to become a data-driven marketer, you need to know the methods of migration and integration of marketing data from various sources. In our opinion, this is one of the key skills that you will learn if you want to rise to the heights of data-driven marketing.

Google Data Transfer Service may prove very useful in helping you achieve this goal. In this article, we’ll show you what it can do for you and how it works.

Why move data from place to place?

Data overload is a huge problem.

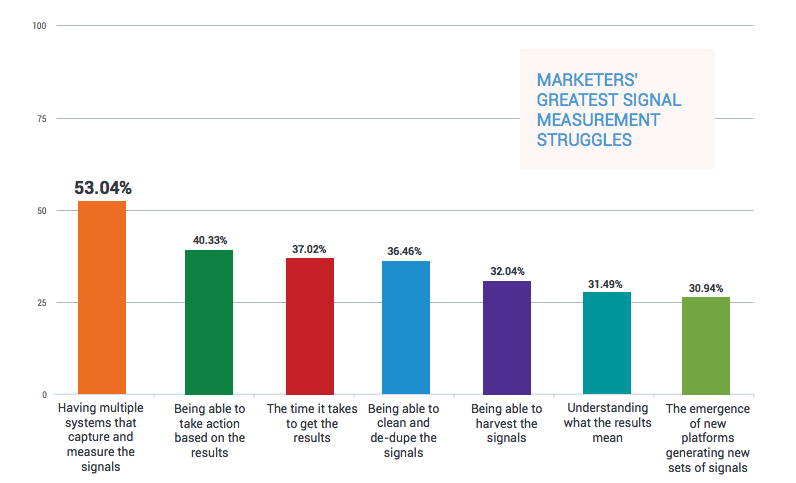

As revealed in the Origami Logic’s 2016 Marketing Signals Report, having multiple systems that capture and measure the marketing signals is the number one measurement struggle for marketers.

Lack of a single source of truth in marketing means that you need to deal with many half-truths. There’s no one instance that clearly tells you what’s working and what’s not, because individual marketing tools are working in their best interest and don’t reconcile their information with each other. Technically, if someone converts on your website after clicking a Google Ads ad on Monday, Instagram ad on Thursday, and LinkedIn ad on Friday, every channel will claim the conversion. If you see 5 conversions on every ad platform, but only 5 leads in your CRM system, that’s really confusing.

The true negative consequence of the above is that it makes you very suspicious of your results and it slows you down in taking action. That’s why if you need to act with confidence, it’s better to have a single source of truth, or a central database of the marketing data. Let’s talk about the upsides of this approach.

The first upside of having all marketing data in one place is a unique insight into your business. Contrary to the isolated channel and tool dashboards, where you don’t have much influence over metrics and the way they are calculated, custom dashboards based on integrated marketing data are completely under your control. The way your metrics are calculated, including bounce rate, churn rate, conversion rate or cart value, is up to you. That way you can also build the true attribution model that will show you the true chunk of the conversion journeys that should be attributed to each channel and format.

The second significant advantage of building a central marketing database is that you increase control over historical data and reduce the risk of losing valuable data (many marketing platforms only store your data for a limited number of months). In addition, you get access to advanced statistical analysis methods, including very deep customer segmentation. Dedicated segments can be used to improve your campaigns on Facebook or Google Ads, LinkedIn Ads, or any other network which lets you use custom audiences. Imagine being able to fire a campaign to a custom audience of users who interacted with your e-mail marketing, chatbot, offline promotion, and newsletters!

Besides, if you choose BigQuery to store your marketing data, you increase the stability and speed of Data Studio reports. This is because the data does not have to be retrieved from the API of the source datasets via Data Studio connectors every time you try to display a new chart. After all, the necessary data is already stored in the BigQuery tables (e.g. as a result of the Google Data Transfer Service).

Finally, the state-of-the-art version of marketing data integration – customer data platforms – give you the ability to talk to your users real-time across multiple channels. However, while not all businesses can afford the luxury of real-time customer data platforms, simple data integration is something anyone can do. Let’s explore the tool that can help you build this single source of marketing truth – BigQuery Data Transfer Service.

The technical side of BigQuery Data Transfer Service

Let’s now describe the technical background of BigQuery Data Transfer Service. This may be a boring part and feel free to skip it, but we love to get a bit deeper, so let’s go!

To fully understand what BigQuery Data Transfer service is, let us introduce you to two acronyms: ETL and ELT. They are two approaches to data processing. The purpose of both ETL and ELT is to obtain data for databases and further for Business Intelligence. Each of these processes includes three steps: data extraction, transformation, and load. The difference between the two approaches is the stage at which the data is transformed. Fivetran made a great job of comparing the two approaches.

In the case of ETL, data is transformed in the second step of the process – immediately after obtaining it from the source, and before loading it into the target database.

In turn, the ELT approach assumes that data transformation is the last step of the process. This means that we actually load raw data into the target database. It’s the analyst who’s responsible for transforming the data in such a way that will help you answer the marketing question at hand.

In recent years, we see that the ELT process is increasingly replacing the ETL process. Charles Wang (Fivetran) called the displacement of ETL by ELT a promise of analysis, not paralysis. This sentence contains all the value that comes from putting the ELT process above ETL, which can be important to the modern marketer. Here’s what we mean.

Advantages of ELT

What makes the ELT approach better for data-driven marketing?

First, you can immediately notice that moving data transformation to the last step reduces the latency, or the time needed for the data extracted from the source data set to appear in the target database.

The ELT approach is also more flexible and hence better adapted to the current reality. But that needs a bit of historical background.

When ETL experienced a boom, storage was expensive, so care was taken to ensure that only well-processed and prepared data was sent to the target database, thus saving on storage costs. The ETL processes were also relatively inflexible as it was the sole responsibility of the IT department to properly program the second step of the process. And as we all know, IT departments often aren’t very attentive to what the marketing team says. As you can guess, this often meant that marketers had to work with data that was not all that useful, even though it came from a variety of marketing sources. Nowadays, the cost of data storage is relatively low and keeps falling. At the same time, the value of time keeps growing. So does the value of insights with a potential to improve the marketing effectiveness.

ELT approach gives data-driven marketers complete control over how their data is being transformed. Ability to control the data transformation step means the ability to control bias. Google Data Transfer, as an example of the ELT process, allows you to transform and wrangle data however you want by running the SQL queries on data collected in the target BigQuery database. What’s more, you can also create your metrics, and dig really deep into the marketing engine. That’s the level of control over marketing data you’re looking for.

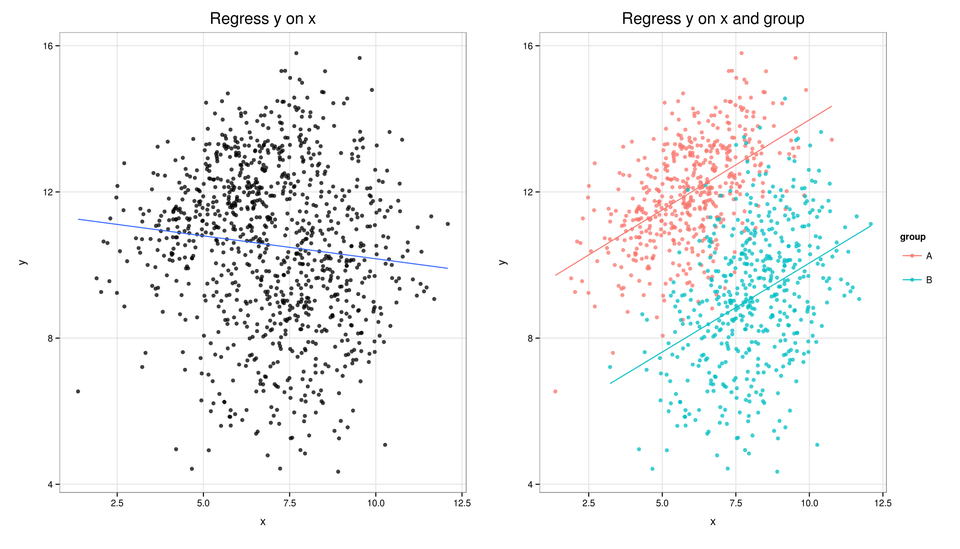

To wrap up this section, let’s take just one example of activity that introduces a lot of bias and that you can control: overly data aggregation. Overly aggregation may lead to conclusions that have nothing to do with the reality described by the data. Among the most well-known is the Simpson’s Paradox, when the aggregation of the two datasets leads to the reversal of the trend in the constituent data sets, as shown below (the image comes from a very interesting discussion on Reddit about the Simpson’s Paradox).

The Simpson Paradox is very common in marketing. In 2021 we still see a lot of marketers making decisions, taking action, and spending money based solely on the marketing reports built out of the aggregated data, in Google Analytics for example. In a complex marketing endeavour, doing so can be a fatal error. In general, the best protection against falling into the Simpson Paradox is to stop drawing conclusions on the basis of aggregated data. It’s safe to assume that such data contains a lot of bias and simply dig deeper.

We’ve dealt with the technical aspects of how Google Data Transfer Service works, so let’s talk about the data sources that this service can help you access.

The practical side of BigQuery Data Transfer Service

BigQuery Data Transfer Service is an ELT tool automates the transfer of data from various marketing sources to BigQuery. Everything is done per the idea of no-code automation and does not require programming knowledge from you. You can interface with this service via API, Google Cloud Platform panel, and terminal. Once set up and configured, data transfer automatically and regularly transfers data from the selected data sets to BigQuery. The service also allows for the transfer of historical data. In the current version of BigQuery Data Transfer Service, however, it is not possible to transfer data from BigQuery outside, i.e. to other databases.

Which tools can you sync data from?

Below is a giant list of all tools you can sync data from using BigQuery Data Transfer. It’s broken down into advertising tools, analytical tools, database technologies, and the rest of martech (conversion optimization, marketing automation, etc.). Guaranteed you’ll recognize a lot of tools you use on regularly.

Advertising tools:

- Adform

- AdRoll

- Apple Search Ads

- Bing Ads

- Criteo

- DoubleClick Campaign Manager (DCM)

- Facebook Ads

- Facebook Ad Insights

- Facebook Page Insights

- Google Ad Manager

- Google Ads

- Google Campaign Manager

- Google Merchant Center

- Google My Business

- Google Search Ads 360

- Google Search Console

- Instagram Insights

- LinkedIn Ad Analytics

- LinkedIn Ads

- LinkedIn Company Pages

- Microsoft Advertising

- Outbrain

- Pinterest Ads

- Quora Ads

- Search Ads 360

- Snapchat Ads

- Snapchat Marketing

- Taboola

- Twitter Ads

- Verizon Media Native Ads

Ad publisher tools:

- DoubleClick for Publishers

- Google AdSense

Analytics:

- Adobe Analytics

- Amplitude

- AppsFlyer

- Google Analytics i GA360

- Mixpanel

- NetSuite SuiteAnalytics

- Segment

- Snowplow

- Yahoo Gemini (Yahoo Search Marketing)

- Yandex Direct

- Youtube Content Owner

Marketing and e-mail automation, e-commerce conversion optimization and customer service:

- Comm100

- Eloqua

- Freshdesk

- Iterable

- Intercom

- Klaviyo

- Mailchimp

- Mandrill

- Marketo

- Optimizely

- Pardot

- Pendo

- Sailthru

- Salesforce

- Salesforce Marketing Cloud

- SalesforceIQ

- SendGrid

- Shopify

- UserVoice

Useful database tools for the data-driven marketer:

- Aurora Postgres

- AWS Lambda

- Dropbox

- FTP

- Google Sheets

- Kinesis

- Magento MySQL

- Webhooks

- Zendesk Chat

Other (selected and not related to marketing):

- Asana

- Apache Kafka

- AWS Lambda

- AWS S3

- Azure Blob Storage

- Braintree

- Jira

Location and pricing of data transfer

Like BigQuery, transfer configuration created in BigQuery Data Transfer Service is attached to a specific place on Earth. However, you only need to remember about setting up the location of the destination table in BigQuery – transfer configuration will copy the location of your target BigQuery location.

Nevertheless, you need to be extra cautious, because config errors may occur if there’s a location mismatch between the region where your data source is located and the destination tables in the BigQuery. In other words, some transfers require colocation. Keep in mind that the sheer majority of data sources will not require colocation.

Locations may seem an abstract topic, but it’s been actually highly practical ever since the GDPR was introduced. There are several rules of the GDPR-compliant transferring of data in the EU that you should keep in mind when choosing your destination table location.

It must not be forgotten that BigQuery (and thus BigQuery Transfer Data Service as well) is not an open-source service but a classic Software-as-a-Service (SaaS). The transfer service itself is free, but you’ll probably need to buy connectors that will grab data from your data sources, especially if you want to sync data from popular tools such as Facebook Ads, Google Ads etc. Unfortunately, after the transfer, BigQuery limits and fees apply. Note that for the majority of companies, the storage costs will be completely marginal.